Do all companies deliver the best customer experience?

In this final blog in the series about “My three bugbears with some Customer Experience programmes” I will focus on the question of whether all companies deliver the best customer experience and drive maximum value to the organisation. If you missed either of the previous posts you can catch up on them using the links below:

- Part #1 – Shouldn’t customer experience measurement lead to insight and action?

- Part #2 – Why is some customer experience feedback inappropriate?

Are some companies just going through the motions of having a CX programme?

At times I feel companies ‘believe’ they must have a CX programme just because it’s what everyone else is doing. And rather than be the odd one out, without the latest ‘new gadget’, they put one together to be part of the gang. However, too often the objectives seem to miss something, or the programme is used for the wrong purpose.

For many, the philosophy of capturing feedback at every touchpoint in a customer’s journey is the most important thing to do. And some think the typical NPS® question plus an open-text verbatim is a silver bullet. Certainly lots of data can be captured, but having spoken to many brands, it seems that a significant number are data rich yet poor in insight and understanding.

To illustrate the point, back in 2013 at the Customer Experience Exchange in Holland, we held a round table with Customer Experience Directors of over 25 global brands. In the session we discussed the metrics they were using and the value they were deriving from their programmes. Overwhelmingly, they all said the same thing: they had masses of data but were struggling to make sense of it or link it to financials or outcomes. What was really surprising was that they thought they were the only ones in this predicament!

So has anything changed in customer experience programmes over the past 5 years?

Five years on, I’d question whether much has fundamentally changed. I’m sure some programmes have moved on and are deriving more value; however, I still see programmes following the same pattern, often with questionable methodologies. CX programmes often require significant investment and, unless they effect change or improvement, are just ‘measures’. For programmes like this I seriously question the value or return on investment.

For me, running a programme just for the sake of it, setting out with the wrong methodology, or asking the wrong question at the wrong time totally devalues it and can annoy the customer.

So where’s my evidence that some CX programmes haven’t changed much in 5 years?

Well, firstly, there is the proliferation of ‘touchpoint’ text surveys when interacting with a brand. I don’t know about you, but on occasions I’ve had four or five from the same company for different interactions in as many days. And secondly, I want to highlight a very specific example of an interaction I had recently with an organisation. To save blushes I won’t name the company because that would be unfair, but you may hazard a guess, as it is a limited market.

An example of an interaction with a well known brand and their CX programme

Choosing the package

About 2 months ago I decided it was time to upgrade my television package. I did some research on the internet, looked at price plans, looked at packages, and logged in to my account. I then made contact with the organisation by telephone because I didn’t really understand my options or which package to choose.

The telephone operator was pleasant and helpful. However, I still didn’t really understand the options, so I made a decision based on the information I had. The order was placed and an engineer booked for 3 weeks’ time! Not ideal, but I had little choice.

However, after a couple of days’ reflection and further discussion with my wife, we decided to change the order and upgrade to the higher spec package.

Changing the order

So I called the organisation and explained we now wanted to change our order. The chap at the other end was helpful and said “yes, no problem, however I’ll need to book a new engineer’s appointment”! This was a bit of a surprise, as all I was doing was changing from one product to the next one up and there were still 2 weeks to the original installation date.

After much ‘discussion’ the chap said “don’t worry we’ll keep the original appointment but I need to give you a new order number”. My instructions were to give the engineer this new order number when he arrived. There wouldn’t be a problem as engineers carry extra products on the van. So I left the call content in the knowledge everything would be fine.

The installation

So, two weeks passed and I waited at home for the engineer. He arrived and I explained I’d changed my order and gave him the new order number. He needed to contact the office to get the new order activated and the old one cancelled. It wasn’t straightforward, but eventually he succeeded after several ‘round the houses’ conversations with office staff.

He installed one of the products I’d ordered, but when he’d finished I said, ‘What about the other one?’ Unfortunately my new order didn’t have both products, only the one I’d upgraded. And because he’d closed the original order to use the new job number, he now wasn’t able to install the second product, because it no longer existed! Roll on two hours and, having been passed around multiple internal departments, he finally managed to install the extra product.

The result? A job that, he said, should have taken him 10 minutes, took him 2 and a half hours, and all because of internal process bureaucracy/failures.

The engineer himself was fantastic, I can’t fault him; he was creative, tenacious and understanding. The process and experience on the other hand was appalling, but I thanked him for his effort and perseverance.

At this point the subject of customer feedback came up, and I said I was looking forward to providing feedback on my experience!

The customer experience programme

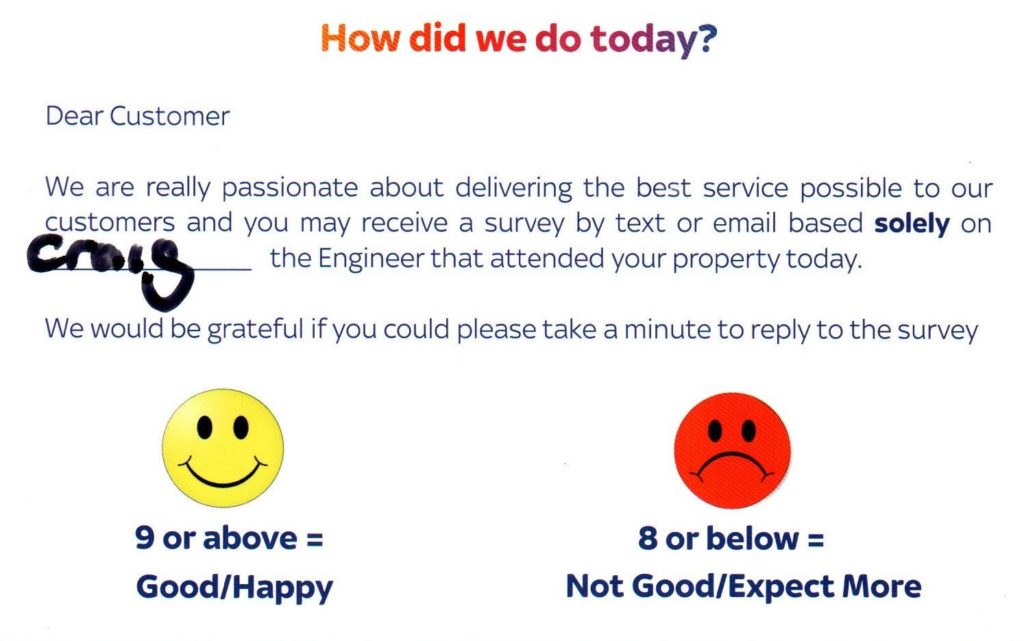

As we got into discussion, he explained that I would receive a text survey, but it would be specifically about him, the engineer. And, even more specifically, any score that I gave would directly impact his personal pay/bonus. For extra help he handed me a card explaining what I needed to do!

The card clearly explained that the feedback was solely about my engineer, Craig. It had totally re-written the principle of NPS, effectively turning it into a 2 point scale: ‘good’ now meant a score of 9 or 10, and ‘poor’ anything from 0 to 8.
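
To make the difference concrete, here is a minimal sketch in Python comparing the standard NPS calculation with the card’s two-band reading. The standard bands (promoters 9 to 10, passives 7 to 8, detractors 0 to 6) are the usual NPS definition. The function names and the sample scores are hypothetical, invented purely for illustration; they are not the organisation’s data or its actual method.

```python
def standard_nps(scores):
    """Standard NPS: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one score")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) count in the total but are neither added nor subtracted.
    return 100 * (promoters - detractors) / len(scores)


def card_share_good(scores):
    """The card's reading as described to me (an assumption about how it is
    applied): 9-10 is 'good', everything from 0 to 8 is 'poor'."""
    if not scores:
        raise ValueError("need at least one score")
    good = sum(1 for s in scores if s >= 9)
    return 100 * good / len(scores)


# Hypothetical scores, invented for illustration only.
sample_scores = [10, 10, 9, 8, 8, 8, 7, 7, 5, 3]

print(standard_nps(sample_scores))     # 3 promoters, 2 detractors out of 10 -> 10.0
print(card_share_good(sample_scores))  # 3 'good' out of 10 -> 30.0
```

On the standard scale an 8 is a passive and does no harm to the score; on the card’s scale it counts against the engineer exactly as much as a 3 does.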

So I was now in a dilemma: unless I gave him a 9 or 10 he would personally lose out, but how can you score 9 or 10 if the overall service or process was poor? Craig told me he’d prefer that I didn’t score him at all than score him low!

So a day or so later my text arrived, but what should I do? Do I give him the great score that he deserved, knowing any complaint alongside it wouldn’t make sense? Or do I score low, so that my complaint about the process gets due attention, but make him lose out?

So my bugbear with programmes like this

My bugbear with programmes like this is whether the organisation derives any value from the feedback process. For me, the process and customer experience from beginning to end was really not great:

- The information on the website wasn’t very clear

- The call to the order centre: whilst the operator was pleasant, I didn’t really get the information I needed to make the right decision

- The second call, to change my order, was a challenge

- The engineer was fantastic, but we both wasted the best part of 3 hours trying to resolve the issue

During this process I did receive a number of CX text survey requests, but in every case it was about the ‘person’: “Please rate your engineer”, “Please rate the operator”…

In all cases the person involved was great, but the underlying processes, systems and bureaucracy were a nightmare. And because the engineer told me the score impacted him personally, it made me question whether any customer responses ever reflected the true experience.

I came away with very mixed feelings: my experience of the organisation itself would make me rate them a low 2 or 3; the operator and the engineer, more like 9 or 10. To save Craig from losing his bonus I decided not to provide feedback at all.

What did the organisation learn?

The overall result was that the organisation didn’t learn anything from my experience, or how to solve similar issues. There was no differentiation between the service provided by the engineer and other parts of the experience. And I now have a lower opinion of the organisation, which I do tell people about. Thus I’m spreading negative word of mouth to family, friends and peers.

Yes, for sure, mine is only an isolated piece of feedback that has been missed. But if I were the operations director, I’d want to know, and be able to fix it. So if the operations director from this organisation reads this blog and works out that it’s their organisation, please feel free to reach out. We’ll happily help you rectify what seem to be some fundamental issues in your processes.

As a CX and insight professional, I find the idea of a card ‘prompting’ customers on how to score methodologically flawed. So if the Insight director is reading this, then perhaps we could also have a conversation?

Delivering return on investment in a CX programme

We achieve a return on investment in a CX programme by understanding the issues, acting upon them and keeping the customer happy. Sadly this was not the case in this example. Designing a good customer experience programme isn’t just about applying an NPS type approach; there’s far more to it.

To help explain our view, we’ve produced the following free guide, ‘Promises, expectation and how to avoid the CX Gap’. In it we take a simple and practical look at the key elements of an effective customer experience programme and how to maximise the ROI.

To download a copy simply click on the image below.